How the pursuit of HD video ruined TV for half a decade

16 September 2020

I’ve been watching a lot of early-2000s sci-fi TV recently, and I’ve noticed that just about every show is plagued by a very specific problem.

Take Stargate SG-1 as a pretty typical example. According to an interview with one of its producers [1], the first two seasons of the show were shot on 16mm film, and from then until season eight it was shot on 35mm film. It was, of course, finished in standard definition, effects compositing included, and for most of its runtime it was a full-screen (4:3) show as well. All this means that the first seven seasons of the show don’t look that great by today’s standards.

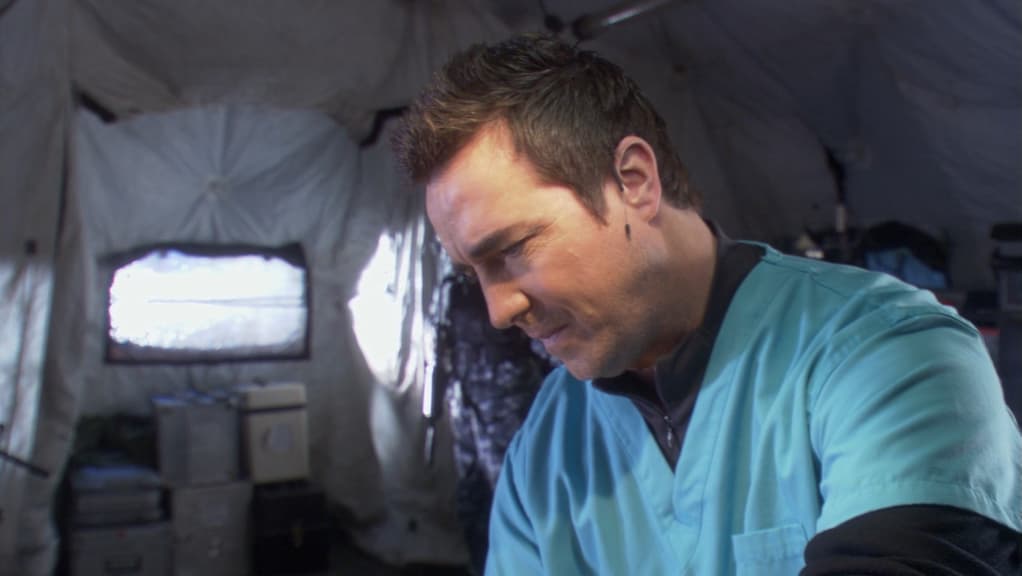

By 2004, however, audiences and networks wanted to see shows in HD, and this meant a different set of expectations and trade-offs. Stargate SG-1 moved to HD digital acquisition for its eighth season. The results are, well…

The choice of this image is obviously selective. What’s happening here is that the dynamic range of the scene (the difference in luminance between the darkest part of the image and the brightest part) is too great to be captured by the camera. The highlights are blown out. This scene is one of the worst looking in the show, but as several other shots indicate, the same problems are obvious in many other scenes too.
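To put rough numbers on “too great to be captured”: dynamic range is usually counted in stops, where each stop is a doubling of light. The Python sketch below is a toy model, not anything measured from the show; the luminance values, the 6-stop latitude, and the `record` helper are all hypothetical. Anything brighter than the camera’s white point records as the same pure white.

```python
import math


def stops_between(bright: float, dark: float) -> float:
    """Luminance ratio expressed in stops (each stop is a doubling of light)."""
    return math.log2(bright / dark)


def record(scene: float, white_point: float = 1.0, latitude_stops: float = 6.0) -> float:
    """Toy sensor model (hypothetical, not the F900's real response):
    light above white_point clips to white, and light more than
    latitude_stops below it sinks into the black floor."""
    black_floor = white_point / 2 ** latitude_stops
    return min(max(scene, black_floor), white_point)


# Hypothetical relative luminances for a sunny exterior, exposed for the face.
face_in_shade = 1 / 32
sunlit_sky = 4.0
bright_cloud = 8.0

print(f"scene range: {stops_between(bright_cloud, face_in_shade):.1f} stops")
for name, value in [("face", face_in_shade), ("sky", sunlit_sky), ("cloud", bright_cloud)]:
    print(f"{name:>5}: scene {value:7.4f} -> recorded {record(value):.4f}")
```

With 8 stops in the scene and only 6 to hold them, something has to give: exposing for the face pushes both the sky and the cloud past the white point, so they record as the same flat white and the detail between them is gone.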

In addition to frequent blow-out issues, these HD shots suffer from quite a bit of dynamic range compression and loss of detail in the highlights. This is visible as a kind of “pastelization” of bright colors, making them “flatter” looking than they ought to be, even though their saturation is at a normal or even raised level.

While the increase in resolution is obviously appreciated, it also comes with quite a bit of quality loss in terms of accurate, film-like colors. Hair and faces tend to glow white or even bluish under any bright light, colors are harsh and crushed, and many shots now have an ugly “plastic” look that further exacerbates issues with the CGI and other effects shots. Even setting the effects aside, many of these shots don’t look photorealistic, and that lack of photorealism in the live-action footage makes the effects shots feel worse on a subconscious level, the plastic glow giving the show a queasy fever-dream veneer.
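One simplified way to see why bright skin and hair drift toward white: if each color channel clips independently, then as exposure rises the brightest channel hits its ceiling first and the others catch up, draining the color out. The Python sketch below assumes a hypothetical linear skin-tone value and a plain per-channel clip; real cameras also apply knees and color matrices, so treat it as an illustration of the mechanism rather than a model of any particular camera.

```python
def expose(rgb: tuple[float, float, float], gain: float) -> tuple[float, ...]:
    """Scale linear RGB by an exposure gain, then clip each channel at 1.0."""
    return tuple(min(channel * gain, 1.0) for channel in rgb)


def saturation(rgb: tuple[float, ...]) -> float:
    """HSV-style saturation: 0.0 is neutral grey/white, 1.0 is fully saturated."""
    hi, lo = max(rgb), min(rgb)
    return 0.0 if hi == 0 else (hi - lo) / hi


skin = (0.60, 0.42, 0.33)  # hypothetical linear RGB skin tone

for gain in (1.0, 2.0, 4.0):
    out = expose(skin, gain)
    print(f"gain {gain}: rgb {[round(c, 2) for c in out]}, saturation {saturation(out):.2f}")
```

At 2× the red channel is already pinned at 1.0 while green and blue keep climbing, so the tone gets paler; by 4× all three channels are clipped and the face is simply white.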

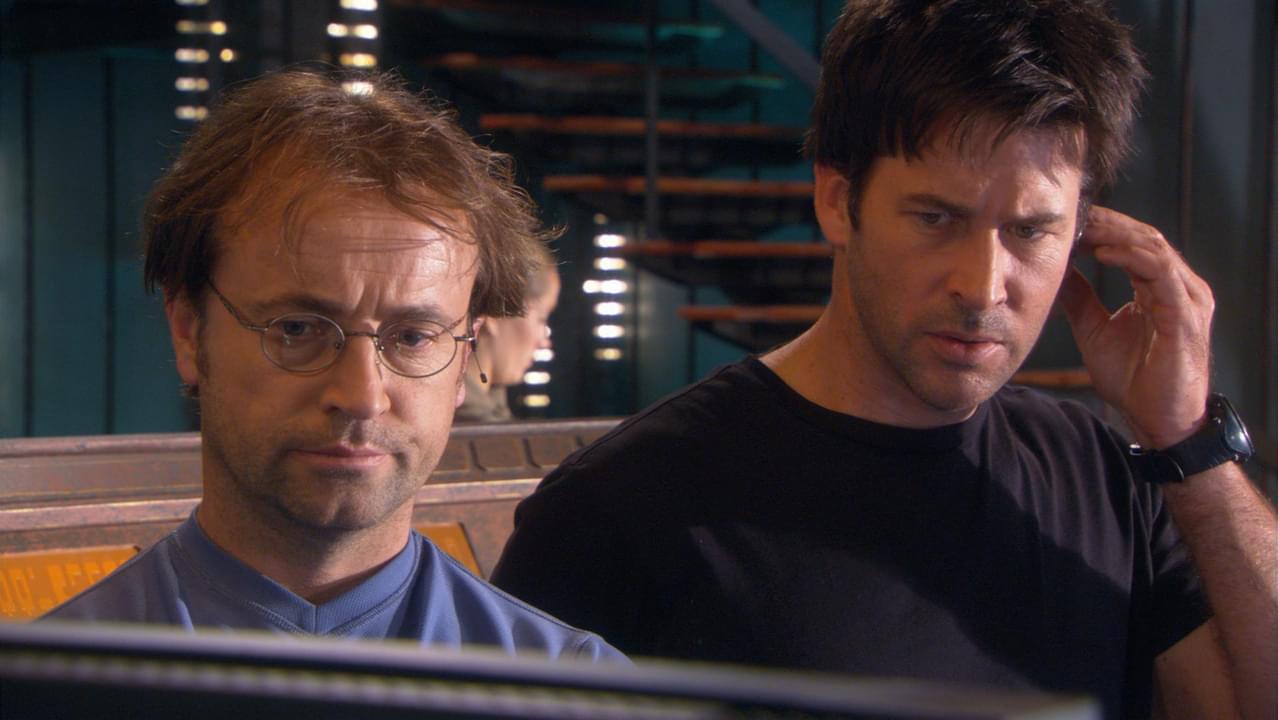

Primarily, these issues affect shots in uncontrolled outdoor lighting. However, SG-1’s sister show, Stargate Atlantis, is frequently affected by lighting issues even when shooting indoors.

I’m not sure what the issue was here. Perhaps it can be chalked up to different crews, with the producers focused primarily on the last season of SG-1, which was airing around the same time. However, I’d also point to the Atlantis crew’s unwillingness to compromise on their shots. The SG-1 stills I’ve used as examples are generally the exceptions: what the show looks like when it looks noticeably bad. The weird plastic-like appearance of HD persists in every shot, but the crew usually managed to avoid major problems with the highlights.

I don’t have firm figures on how much the filming changed due to HD, but it’s pretty indicative that I had to look through quite a few episodes of SG-1 before I could find the kind of shot I was thinking of. Many episodes from the last three seasons are shot entirely indoors under carefully controlled lighting, on some combination of the Stargate Command set and a few others. As I remember it, this marked a clear shift away from the kind of “new planet” episode openers many of the earlier seasons depended on.

Even when shots in the later seasons of SG-1 are clearly outside, they tend to be in places where the characters are in shadow, for example in the ubiquitous forest scenes, inside a warehouse, or on the shadowed side of a building or mountain. Anything to control the bright highlights! In other scenes, heavy lighting was used to blend the scene together better and give it a unified aesthetic, removing any harsh glows.

Quick trivia question: what do Attack of the Clones, Stargate SG-1, and Battlestar Galactica all have in common? Answer: they were all shot on the same camera, Sony’s F900 HD series. This was an early professional HD camera that was readily available and affordable even for TV shows. It suffers from a number of issues, most obviously the limited dynamic range mentioned above, but also the fact that anyone working with HD video this early was most likely recording in the same gamma as the final product (e.g. Rec. 709), instead of using a log curve as more modern productions would. Even if the F900’s image sensor had been fantastic, shooting this way would probably have crushed quite a bit of the original detail out of the image, in a way that couldn’t be recovered in post-production.
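Here’s a rough illustration of why the choice of curve matters, as a minimal Python sketch. `rec709_oetf` follows the ITU-R BT.709 transfer function, simplified by hard-clipping everything above reference white (real broadcast cameras usually add a highlight knee); `toy_log` is a made-up log curve, not S-Log or any other vendor’s, that spreads 8 stops below and 6 stops above mid grey evenly over the signal. Both are quantized to full-range 10-bit code values.

```python
import math


def rec709_oetf(linear: float) -> float:
    """ITU-R BT.709 transfer function. Scene light above reference
    white (1.0) has nowhere to go, so it is clipped before encoding."""
    L = min(max(linear, 0.0), 1.0)
    if L < 0.018:
        return 4.5 * L
    return 1.099 * L ** 0.45 - 0.099


def toy_log(linear: float, mid_grey: float = 0.18,
            stops_below: float = 8.0, stops_above: float = 6.0) -> float:
    """Hypothetical log curve (not any vendor's): each stop around
    mid grey gets an equal slice of the 0..1 signal range."""
    if linear <= 0:
        return 0.0
    v = (math.log2(linear / mid_grey) + stops_below) / (stops_below + stops_above)
    return min(max(v, 0.0), 1.0)


def to_10bit(signal: float) -> int:
    """Quantize a 0..1 signal to a full-range 10-bit code value."""
    return round(signal * 1023)


# Reference white, then one and two stops above it.
for scene in (1.0, 2.0, 4.0):
    print(scene, to_10bit(rec709_oetf(scene)), to_10bit(toy_log(scene)))
```

Under the Rec. 709 encoding, reference white and the two stops above it all land on the same code value, so that detail is gone for good; the log curve gives each one its own code value, leaving room to decide how to roll off the highlights in the grade.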

To be clear, the F900 wasn’t unique in having these problems; they were largely a side-effect of being an early adopter of HD acquisition. That said, while many episodes of SG-1 took care to avoid putting the worst aspects of HD video on clear display, Attack of the Clones and Galactica didn’t work around these limitations, and the results are quite frequently terrible.

The dated CGI of Attack is often blamed for giving the film its plastic look, and it certainly contributes, given the number of shots that are almost entirely greenscreened. But as you can see from examining these shots closely, the lighting issues of this early HD camera are another significant factor.

But now of course we come to Battlestar Galactica, which more than any other show I can think of illustrates the pitfalls of shooting with HD video cameras.

Frankly, Battlestar Galactica is so bad it seems deliberate. Almost every single shot in the show looks like this; it’s borderline unwatchable.

Unfortunately, it does in fact seem that some of this was intended, as part of the creators playing with what was, to them, a new format. An article on the use of HD in Star Trek: Enterprise and Battlestar Galactica [2] describes the difficulties the creators of Galactica faced in achieving the aesthetic they wanted for the show, described as “grungy” and “gritty”, with pushed grain. To get this result on a TV shooting schedule, DP Steve McNutt developed a real-time color management process for adjusting color on set, using little, if any, post-production color correction.

As part of an attempt to replicate a “documentary-style”, shot-on-film look, McNutt “[pushed] for video noise to emulate grain”, sometimes with gain as high as +18 dB. The visual effects supervisor, Gary Hutzel, says that the DP “pushed gamma, crushing the blacks, clipping the highlights”. That’s extremely telling. It isn’t worthwhile to parcel out blame, in hindsight, for a show shot almost 20 years ago. What’s important is how the specific limitations of shooting in HD forced creators to experiment with novel techniques to get the looks they wanted, with expectations often keyed to the behavior and appearance of the film stocks they were used to working with.
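For a sense of scale: camera gain is quoted in decibels of signal amplitude (20·log10 of the ratio), so every +6 dB roughly doubles the signal, which is about one stop. The short Python sketch below just does that conversion. The amplifier boosts the sensor’s noise by the same factor as the picture, which is why cranking it up reads as grain.

```python
import math


def gain_db_to_factor(db: float) -> float:
    """Convert video gain in dB to an amplitude ratio: ratio = 10 ** (dB / 20)."""
    return 10 ** (db / 20)


def factor_to_stops(factor: float) -> float:
    """Express an amplitude ratio in stops (doublings)."""
    return math.log2(factor)


for db in (6, 12, 18):
    factor = gain_db_to_factor(db)
    print(f"+{db} dB -> x{factor:.2f} signal (and noise), about {factor_to_stops(factor):.2f} stops")
```

So +18 dB works out to roughly an 8× amplification of both picture and noise, or about three stops.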

Even with the F900, Battlestar Galactica didn’t have to look as bad as it did, but it’s easy to see how seeking the intensity and immediacy of a grainy film stock led too many directors astray.

Right now you might be thinking of the Battlestar Galactica miniseries that aired the year before, and wondering why it looked so good. Indeed, it does look fantastic. It was shot on 35mm film.

Now you might recall that the Battlestar Galactica miniseries is available on Blu-ray. And it’s not upscaled — it looks great. So if everyone was already shooting on 35mm film before HD video took hold, why not just continue doing so when the networks wanted HD?

It turns out that most producers would have liked to stay on film, but were forced to switch by budgetary demands. For example, SG-1 producer John Lenic said, speaking about the follow-up show Stargate Universe [1]:

> Film still is from a look perspective and in my opinion, the best looking format to shoot on. … The only reason that we didn’t go film is financial. Film still is roughly $18,000 more per episode than digital as you have all the developing and transferring of the film to do after it is shot.

This transfer cost was something that producers were willing to bear when it seemed to be necessary, but less so when HD promised a sharper image for less money. In particular, continuing to use film when shooting for HD would have meant having to do the transfers in HD as well, along with the same finishing costs required for HD post-production, including the effects shots. Additionally, the “cleaner” image of HD video, lacking in rough grain from film stock, made effects compositing a bit easier to do in HD.

With Battlestar Galactica as well, director Michael Rymer initially opposed shooting with HD cameras [2]. Here too, the decision came down to money: it wouldn’t have been possible to produce the show at all if it were shot on film. In the previously cited article, Rymer notes many of the issues I have pointed out. In particular, he says “the video was picking up the fluorescent bars of various consoles on our [spaceship interior] set”, and calls daylight exteriors “less than satisfactory” and “the worst environment for HD”. That said, Rymer did eventually come around to the look of the show on HD, saying that the sensor noise “approximated film grain nicely” and that he could ultimately achieve the desired aesthetic. On this point I have to disagree, but again, we’re looking back with 20 years of hindsight on how badly early HD footage and CGI have aged.

As it turns out, a ton of productions were shot with the F900 and cameras like it, and the effect on TV production quality was fairly devastating. This isn’t a critique of shooting digitally in general, of course; the technology has vastly improved over the last decade. Some shows, like The Big Bang Theory, are so reliant on indoor sets and carefully controlled lighting that the limitations of shooting in HD don’t really show. Others, like The Office, use the raw, harsh lighting to their advantage, creating an alienating and sterile environment that pushes the presence of the camera in your face and achieves a documentary tone that, in my opinion, Galactica never did.

Once you decide to go with digital acquisition and approximate the final color grade in-camera, there’s basically no hope of an improved release (for home video or otherwise) years down the road. On the other hand, it’s worth pointing out that early seasons of SG-1, because they were shot on film, could be released in HD one day. The difficulty of course is to find someone willing to put up the money to have the original negatives scanned, and possibly recomposite the effects in HD. For very popular shows, like Star Trek: TNG, this has actually been done, but there’s little hope of the same for less popular shows like Stargate.

Here too, then, there are tradeoffs. While we do have HD versions of the later seasons of SG-1 (though only on streaming services, not Blu-ray), we have to deal with the permanent damage to the image quality done by the acquisition method. On the other hand, the earlier seasons have the potential to see beautiful high definition scans, but this will likely never happen because it’s simply too expensive.